Read Scaling Websocket Traffic

Using database replication to scale the system

Better.com has experienced outstanding growth in 2020. Employees and customers have more than doubled. This growth has had a significant impact on our database layer. We did an analysis of traffic and determined that the load on our database is read heavy. So we decided to introduce a read scaling solution. Read scaling consists of setting up database replication from a read-write master database to one or more read-only databases. Writes are then directed to the master database and reads are directed to the read-only replicas.

The problem with read scaling is that there can be a replication delay. The time it takes for a write to the master database to be reflected in a replica is known as replication lag.

Database Replication Lag

One thing to keep in mind about database replication is lag - the time it takes for an update to the master to be reflected in the replica. Ideally, there would be no replication lag and any read query could be directed to a read-only replica without impacting system correctness. In practice - and especially since we are just getting started with read scaling the system - the load on the master database is sometimes high enough that lag spikes regularly occur.

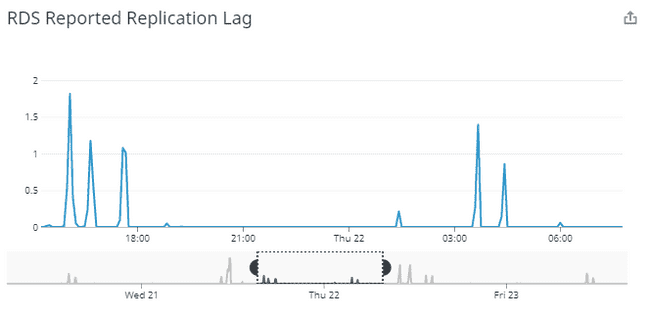

Here’s a recent graph showing the spikes in replication lag that occur regularly in our system (the y axis is in minutes).

Although the spikes only happen a few times a day, we clearly have a problem with lag. A long term goal is to shape the traffic to the master database such that load is never high enough to cause regular spikes in replication lag, but for now we need to design our solution so that it continues to work when replication lag occurs.

Which Traffic Can We Read Scale?

With all this in mind, we set out to find a portion of the read-only query traffic that we can direct to the read-only replicas. It must be traffic that can tolerate occasional lag. This was more difficult than we originally anticipated. Our site is interactive. Changes often have an immediate visible impact on the UI: elements appear or update to represent new or changed data in the database, and that data is often fetched by a read query that runs right after the insert or update statement. Some of this can be optimized, but for now we have a system that often performs read queries immediately after changes.

This pattern is particularly true with the websocket messages we send as a result of things changing on the site. A change message is sent to subscribing clients, and when these messages are received the clients query for the updated resource. Many resources are too large to fully send out inside the websocket message, hence the need for a follow-up query to receive the full change.

But this suggests an idea: what if we could delay our websocket messages by a small amount, say 3 seconds, long enough that 99% of the time the resulting query can be directed to the read-only replica? Would this work? A 3 second delay would be long enough to cover most replication lag while still being short enough to not have a significant impact on user experience. But what about spikes in lag that are longer than 3 seconds? Such spikes do happen, and when a change has not yet reached the read-only replica the consequence is either a 401 or a 404 response (the former when the data change alters a resource’s visibility). A 401 response can sometimes invalidate a session and force the user to sign in again. Too many 404 responses would train our internal users to refresh the page a lot - very bad for scaling! So it’s clear we need to solve for this edge case.

Initial Solution Design

Let’s start by designing a solution that doesn’t solve the > 3 second lag problem and then see if we can change it to handle larger lag times.

Solution design (ignoring > 3 second lag):

- Delay websocket messages by 3 seconds by adding each websocket message to an ActiveMQ queue with a 3 second delay and then in the queue handler sending the actual websocket message.

- Requests from the frontend initiated in a websocket handler get a new query parameter readonly=true.

- Detect readonly=true in the api layer and route any resulting queries to the read-only replica.
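The three steps above can be sketched roughly as follows. This is a minimal illustration and not our production code: `DelayedQueue` is an in-memory stand-in for a broker queue with delayed delivery, and `choose_database`, the `"replica"`/`"primary"` labels, and the message shape are hypothetical names chosen for the example.

```python
from __future__ import annotations

import heapq
from dataclasses import dataclass, field

DELAY_SECONDS = 3.0  # the delay discussed in this post


@dataclass(order=True)
class _Delayed:
    deliver_at: float
    seq: int  # tie-breaker so equal timestamps keep publish order
    message: dict = field(compare=False)


class DelayedQueue:
    """In-memory stand-in (assumption) for a broker queue with delayed delivery."""

    def __init__(self, delay: float = DELAY_SECONDS) -> None:
        self.delay = delay
        self._heap: list[_Delayed] = []
        self._seq = 0

    def publish(self, message: dict, now: float) -> None:
        # Schedule the message for delivery `delay` seconds from now.
        self._seq += 1
        heapq.heappush(self._heap, _Delayed(now + self.delay, self._seq, message))

    def due(self, now: float) -> list[dict]:
        # Pop every message whose delay has elapsed; the queue handler
        # would send each of these out as the actual websocket message.
        ready = []
        while self._heap and self._heap[0].deliver_at <= now:
            ready.append(heapq.heappop(self._heap).message)
        return ready


def choose_database(query_params: dict[str, str]) -> str:
    # Route to the replica only when the client explicitly set readonly=true.
    return "replica" if query_params.get("readonly") == "true" else "primary"
```

Defaulting to the primary when the parameter is absent keeps every request that did not originate from a delayed websocket message on its existing path.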

Adding in the Edge Cases

How will we change this solution to handle the edge case? The behavior we want when > 3 second lag is occurring is to either 1) increase the message delay or 2) tell the client to not set the readonly=true flag (so that the requests fall back to reading directly from the primary database).

We opted for the latter option since it was both simpler and less impactful to the end user experience.

The solution we came up with is to use the above-outlined approach plus two additional layers of protection.

- In the queue handler, just before sending the websocket message, query the read-only replica to obtain the current replication lag. If the lag is too large, send the websocket message with a flag indicating that the readonly=true param should NOT be set.

- As added insurance, in the backend API layer, watch for requests with readonly=true that would return a 404. When this happens, retry the query against the primary database instead of the read-only replica.
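Here is one way the two layers of protection might look, as a sketch under stated assumptions. The `use_readonly` flag name, the `build_websocket_message` and `fetch_resource` helpers, and the convention that a query returns `None` for a missing row (which the API would turn into a 404) are all hypothetical and only serve the illustration.

```python
from __future__ import annotations

from typing import Callable, Optional

MAX_LAG_SECONDS = 3.0  # matches the websocket message delay

Row = dict
Query = Callable[[str], Optional[Row]]  # returns None when the row is missing


def build_websocket_message(change: dict, lag_seconds: float) -> dict:
    # Layer 1: tell the client whether it may set readonly=true
    # on its follow-up request, based on the current replication lag.
    return {**change, "use_readonly": lag_seconds <= MAX_LAG_SECONDS}


def fetch_resource(
    resource_id: str,
    readonly: bool,
    query_replica: Query,
    query_primary: Query,
) -> Optional[Row]:
    # Layer 2: a miss on the replica may just mean the write has not
    # replicated yet, so retry on the primary before answering 404.
    if readonly:
        row = query_replica(resource_id)
        if row is not None:
            return row
    return query_primary(resource_id)
```

With this shape a readonly request only costs a second query in the rare case where the replica misses, and a resource that is truly absent still ends up as a 404 after the primary confirms it.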

Conclusion

We implemented and rolled out this solution, and it’s working great, but there were some interesting challenges along the way. For instance, it turns out that querying for replication lag in PostgreSQL involves taking a spin lock that’s also used internally by the database for atomic replication operations. Querying for lag too often would therefore increase lag! We worked around this by using a Redis cache to store the replication lag and updating it as infrequently as possible while still being able to determine if current lag > 3 seconds.
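The caching idea can be sketched as a small time-to-live wrapper. This is an illustration only: it keeps the value in process memory rather than in Redis, and `CachedLag`, `fetch_lag`, and the TTL value are hypothetical names and parameters. The point is simply that the expensive lag query runs at most once per TTL window, no matter how many websocket messages are sent.

```python
from __future__ import annotations

import time
from typing import Callable, Optional


class CachedLag:
    """Caches the replication lag so the database is asked at most once per TTL."""

    def __init__(
        self,
        fetch_lag: Callable[[], float],  # hypothetical: runs the real lag query
        ttl_seconds: float,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._fetch_lag = fetch_lag
        self._ttl = ttl_seconds
        self._clock = clock
        self._value: Optional[float] = None
        self._fetched_at = 0.0

    def get(self) -> float:
        now = self._clock()
        # Refresh only when nothing is cached yet or the cached value expired.
        if self._value is None or now - self._fetched_at >= self._ttl:
            self._value = self._fetch_lag()
            self._fetched_at = now
        return self._value
```

The TTL has to stay comfortably below the 3 second threshold; otherwise a stale reading could hide a spike that has already started.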

After a few weeks in production (at the time this blog post was written), the change is proving to work exactly as intended. Almost all websocket traffic is going to the read-only replicas. It’s not a large enough percentage of the traffic to say that we’re done in the read scaling department, but the change puts us on a great path for scaling the websocket-based load in our system.

We're Hiring!

Better.com is solving some cool technical problems. Come join us!
