Wizard — our ML tool for interpretable, causal conversion predictions

Building an end-to-end ML system to automatically flag drivers of conversion

When Dorothy reaches Emerald City, she expects to find the Wizard, a mysterious figure whose vast powers are beyond comprehension. When she first meets the Wizard, he is a frightening disembodied head surrounded by fire and smoke. However, upon closer inspection, he is revealed to be nothing more than an old man pulling mechanical levers.

At Better.com, we are obsessed with understanding what makes borrowers go from submitting a mortgage application to ultimately funding with us as their direct lender. Our conversion metrics often feel like the Wizard's disembodied head: untamable and beyond understanding. However, with a little data science magic, we can start to pull back the curtain on what is driving our business.

Introducing Wizard

At a high level, Wizard is a tool to help us understand what is driving changes in conversion this month. It is interpretable because it attributes percentage-point (pp) changes in conversion to pp changes in specific drivers. It aims to be causal by including control features in the model that help cancel out potential confounding factors, at least to an extent.

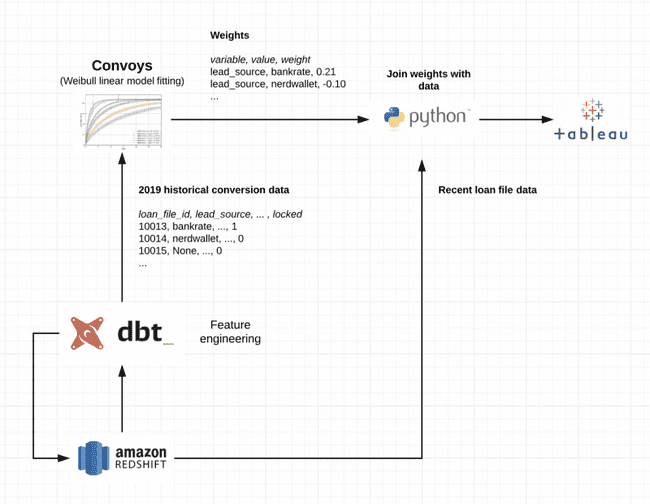

Wizard is a system of three components:

- Feature engineering

- Model fitting

- Visualization

We will cover each of these components and then close with some key learnings.

Feature engineering

The features that drive conversion for our business can largely be grouped into three categories.

- Customer Engagement: We have a large customer support team that handles inbound and outbound calls with our customers. It stands to reason that the stronger our customer support experience, the higher the conversion we observe.

- Example variables: call engagement (e.g. whether or not we spoke with the borrower on the phone), speed to app (i.e. time from application to first call)

- Quality of Applicant: Every day, several hundred borrowers submit a mortgage application with Better. Some applications are naturally more likely to transact than others (e.g. those with higher credit scores). Features in this category help us control for changes in conversion that stem from changes in our overall customer profile.

- Example variables: lead source, credit score, debt to income ratio (i.e. "dti")

- Market Conditions: Other lenders' pricing, Fed rates, and general market volatility all impact our business.

- Example variables: price competitiveness (i.e. the spread between our loan product's rate and the Mortgage News Daily benchmark rate)

We use dbt to collect all of these features in a wide format, keyed on loan file, which is our unique identifier for a mortgage application. You can imagine the output of this pipeline is a table like this:

| loan_file_id | first_call_at | credit_score | rate |
|---|---|---|---|
| abc123 | 2019-10-01 | 700 | 3.5 |

Materializing our cleaned features directly in our data warehouse (Redshift in this case) has proved to be a strong design choice. In addition to the out-of-the-box testing framework and documentation that come with dbt, this derived table has powered other analyses and helped us iterate more quickly on model fitting.
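To make the step from a wide feature row to model inputs concrete, here is a minimal sketch of turning one row into 0/1 indicator features. It assumes a `column.value` naming scheme, inferred from weight names like `dti.>=0.43` and `had_successful_call.True` that appear later in this post; the function name `encode_loan_file`, the `had_successful_call`, `dti`, and `lead_source` columns, and the one-hour speed-to-app cutoff are all hypothetical, not Better's actual transformations.

```python
from datetime import datetime


def encode_loan_file(row):
    """Turn one row of the wide feature table into 0/1 indicator features.

    Hypothetical sketch: the `column.value` naming mimics weight names such
    as `dti.>=0.43`; column names and the 1-hour cutoff are assumptions.
    """
    features = {}
    # Customer engagement: whether we spoke with the borrower (a bool column).
    features[f"had_successful_call.{row['had_successful_call']}"] = 1
    # Customer engagement: speed to app, bucketed; no call yet is its own bucket.
    if row["first_call_at"] is None:
        speed = "no_call"
    else:
        hours = (row["first_call_at"] - row["created_at"]).total_seconds() / 3600
        speed = "<1h" if hours < 1 else ">=1h"
    features[f"speed_to_app.{speed}"] = 1
    # Quality of applicant: bucket debt-to-income at 0.43.
    features["dti.>=0.43" if row["dti"] >= 0.43 else "dti.<0.43"] = 1
    # Categorical columns pass straight through as `column.value`.
    features[f"lead_source.{row['lead_source']}"] = 1
    return features


row = {
    "created_at": datetime(2019, 10, 1, 9, 0),
    "first_call_at": datetime(2019, 10, 1, 9, 30),
    "had_successful_call": True,
    "dti": 0.45,
    "lead_source": "organic",
}
print(sorted(encode_loan_file(row)))
```

Keeping this kind of bucketing in the derived table (rather than in the model code) is what lets other analysts reuse the same feature definitions.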

Model fitting

At its core, we are trying to predict conversion of submitted mortgage applications into "rate locks", a key moment in our funnel when initial underwriting begins. There can be a considerable lag before a rate lock occurs, so many recent applications have not had time to convert yet. To handle outcomes we haven't observed yet, we rely on survival analysis models.
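Survival models handle not-yet-converted applications by treating them as right-censored: we know they have not converted *so far*, not that they never will. A minimal sketch of turning creation and conversion timestamps into the usual pair of inputs (a converted flag and an observed duration) is below; the function name `to_survival_arrays` is hypothetical, and this does not use the convoys API.

```python
from datetime import datetime


def to_survival_arrays(rows, now):
    """Convert (created_at, converted_at) pairs into survival-analysis inputs.

    Returns two lists:
      B[i] = 1 if application i has converted, else 0 (right-censored)
      T[i] = days from creation to conversion, or days observed so far
    """
    B, T = [], []
    for created_at, converted_at in rows:
        if converted_at is not None:
            B.append(1)
            T.append((converted_at - created_at).total_seconds() / 86400)
        else:
            # Not converted yet: we only know conversion takes longer than this.
            B.append(0)
            T.append((now - created_at).total_seconds() / 86400)
    return B, T


rows = [
    (datetime(2019, 10, 1), datetime(2019, 10, 11)),  # converted after 10 days
    (datetime(2019, 10, 5), None),                    # still open, censored
]
B, T = to_survival_arrays(rows, now=datetime(2019, 10, 15))
```

A plain classifier would wrongly count the second application as a non-conversion; the censored flag tells the model it has only been observed for 10 days.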

Luckily, we've built an internal ecosystem around convoys, our own open-sourced survival analysis library, that makes creating these kinds of models incredibly easy. At a high level, the model fitting step is as simple as:

```python
df = get_apps()  # load rows from the derived feature table
model = Weibull(flavor='linear')  # use Weibull with linearized loss as our model
model.train(df, 'created_at', 'converted_at')  # fit on creation and conversion timestamps
model.save('ml.wizard_model')  # save the model as jsonb in our database
```
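To show what a model like `Weibull(flavor='linear')` in the snippet above predicts, here is a sketch of a Weibull conversion curve with a final conversion rate `c`, i.e. P(converted by t) = c · (1 − exp(−(λt)^k)). It assumes that the 'linear' flavor makes `c` an additive sum of feature weights plus an intercept; the function name `conversion_by` and all parameter values are hypothetical, for illustration only.

```python
import math


def conversion_by(t_days, features, weights, intercept, lam, k):
    """P(converted within t_days) for one loan file.

    Sketch of a Weibull model with a final conversion rate c, assuming the
    'linear' flavor makes c an additive sum of feature weights.
    """
    c = intercept + sum(weights.get(f, 0.0) * v for f, v in features.items())
    c = min(max(c, 0.0), 1.0)  # keep the rate a valid probability
    # The Weibull CDF controls how quickly the eventual conversions arrive.
    return c * (1.0 - math.exp(-((lam * t_days) ** k)))


weights = {"had_successful_call.True": 0.08}  # made-up weight
with_call = conversion_by(1e6, {"had_successful_call.True": 1}, weights, 0.2, 0.1, 1.0)
without_call = conversion_by(1e6, {}, weights, 0.2, 0.1, 1.0)
```

Because `c` is additive, the long-run gap between `with_call` and `without_call` is exactly the feature's weight, which is what makes statements like "8pp more likely to convert" read straight off the model.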

A few other notes on the model design:

- Training data is based on the past year of historical data

- The model is retrained daily, but the "prod" model in our database is only updated if the new model's out-of-sample AUC exceeds 0.75

- The 'linear' flavor creates an additive model, which breaks conversion down into a linear combination of factors. This lets us read the weights directly, e.g. "loan files that had a successful call are 10pp more likely to convert". You can see the loss function in the source code. Using this loss results in slightly worse accuracy, but we think it's a worthwhile trade-off for interpretability.

- We add a dummy "month" feature that specifies the month the loan file was created. We use this feature's coefficient as our "unexplained" term, capturing variation not explained by the rest of the model.

- The model has some built-in regularization, so we didn't spend much time optimizing the feature set.
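The promotion rule above can be sketched as a rank-based AUC check. How Better labels censored applications for the out-of-sample AUC isn't described here, so this assumes binary labels on held-out loan files (e.g. converted within some horizon or not); the function names `auc` and `should_promote` are hypothetical.

```python
def auc(labels, scores):
    """Rank-based AUC: P(a random positive outscores a random negative)."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative label")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5  # ties count as half a win
    return wins / (len(pos) * len(neg))


def should_promote(labels, scores, threshold=0.75):
    """Only replace the prod model if out-of-sample AUC clears the bar."""
    return auc(labels, scores) > threshold
```

The pairwise loop is O(positives × negatives), which is fine for a sketch; a sort-based version scales better on a year of loan files.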

Visualization

To recap, we now have a derived feature table as well as a model that tells us how much each feature increases a loan file's likelihood of conversion. For example, you can imagine we have something like this in our database:

| feature | weight |
|---|---|
| first_intent.definitely | 0.311246 |
| dti.>=0.43 | 0.081394 |
| had_successful_call.True | 0.080334 |
| ... | (lots of other feature weights) |

This output is easy to scan for general insights (e.g. having a successful call increases the likelihood of conversion by 8pp). However, recall that our original question is what is driving conversion this month. To answer it, we multiply each feature's weight by the change in that feature's distribution (the share of loan files that have it) this month versus last month.

Consider this example: say that loan files with a successful call are historically 8pp more likely to convert (we know this from the output of our model). Now, say that this month we engaged 10 percentage points more of our loan files than in the previous month. It stands to reason that we should expect this month's conversion to go up by 8pp × 0.10 = 0.8pp.
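The weight-times-change attribution can be sketched in a few lines. This assumes each loan file is represented as a dict of 0/1 indicator features and that weights are on a probability scale (0.08 = 8pp); the function names `feature_shares` and `attribute` are hypothetical.

```python
def feature_shares(rows):
    """Fraction of loan files in `rows` that have each indicator feature."""
    counts = {}
    for features in rows:
        for name, value in features.items():
            counts[name] = counts.get(name, 0) + value
    return {name: c / len(rows) for name, c in counts.items()}


def attribute(weights, share_last, share_this):
    """Per-feature contribution (in pp) to the month-over-month change."""
    contributions = {}
    for feature, weight in weights.items():
        delta_share = share_this.get(feature, 0.0) - share_last.get(feature, 0.0)
        contributions[feature] = weight * delta_share * 100  # probability -> pp
    return contributions


# The example from the text: call rate goes from 50% to 60% of loan files.
last = [{"had_successful_call.True": 1}] * 5 + [{"had_successful_call.False": 1}] * 5
this = [{"had_successful_call.True": 1}] * 6 + [{"had_successful_call.False": 1}] * 4
contrib = attribute({"had_successful_call.True": 0.08},
                    feature_shares(last), feature_shares(this))
```

Here `contrib["had_successful_call.True"]` comes out to roughly 0.8, matching the 0.8pp worked by hand above.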

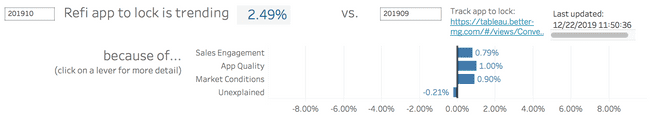

In Tableau, we set up two different views. The first is a "waterfall" chart that explains the month-over-month change, split by our three top-level categories. This view functions as the "executive summary" of what is happening with conversion.
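The waterfall view boils down to summing per-feature contributions into the three categories and stacking them between last month's and this month's conversion. A minimal sketch, assuming contributions are already in pp and that any feature without a category (such as the month dummy, here given the hypothetical name `month.2019-10`) is reported as "Unexplained"; the function name and `CATEGORY_ORDER` are hypothetical.

```python
CATEGORY_ORDER = ["Customer Engagement", "Quality of Applicant",
                  "Market Conditions", "Unexplained"]


def waterfall(last_month_rate, contributions, categories):
    """Build waterfall bars: start value, one bar per category, end value."""
    totals = {}
    for feature, pp in contributions.items():
        group = categories.get(feature, "Unexplained")  # e.g. the month dummy
        totals[group] = totals.get(group, 0.0) + pp
    # Known categories first in a fixed order, then any unexpected ones.
    ordered = [g for g in CATEGORY_ORDER if g in totals]
    ordered += sorted(g for g in totals if g not in CATEGORY_ORDER)
    bars = [("Last month", last_month_rate)]
    running = last_month_rate
    for group in ordered:
        running += totals[group]
        bars.append((group, totals[group]))
    bars.append(("This month", running))
    return bars


bars = waterfall(
    30.0,  # made-up last-month conversion, in percent
    {"had_successful_call.True": 0.8, "dti.>=0.43": -0.3, "month.2019-10": 0.1},
    {"had_successful_call.True": "Customer Engagement",
     "dti.>=0.43": "Quality of Applicant"},
)
```

The resulting list maps directly onto a waterfall chart: the first and last bars are absolute levels, and the bars in between are signed deltas.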

The second view uses Tableau's actions to allow deeper dives: a user can click a particular feature to see more detail, all the way down to the distribution of a given feature over time. This is a nice design pattern: the detail is abstracted away but readily available when the user asks for it. The gif below shows the tool in action.

In conclusion

Past efforts to understand conversion involved a mad scramble of ad-hoc analyses that rarely revealed anything obvious or conclusive. Though this first version of Wizard is fairly simple, it is already a dramatic improvement because it automates those ad-hoc efforts.

Wizard is currently used by business operations and our customer support team as the first line of analysis in "steering the ship", so to speak. We plan to generalize Wizard to more conversion use cases at Better.

The final systems design of Wizard looks like this:

Some last thoughts on what I learned from this project:

- Decouple your feature engineering from modeling. Doing our feature engineering in dbt and piping that derived table into our modeling code turned out to be a huge win. The feature engineering table we built ended up being incredibly popular among other analysts and has also helped us standardize common feature transformations.

- Prioritize an end-to-end solution over a good model. Most stakeholder meetings devolved into endless discussions about new features / data we should add to the model. The modeling is the most fun to talk about, but also the least important in the beginning phases of a project. What's most important is getting the simplest solution working first and then iterating from there.

- Sell hard to the business. So you've delivered exactly what your stakeholders asked for... you're done, right? Not a chance. Most of the time, stakeholders ask for what they think they want, and they still need help incorporating what you've built into their business processes. The last thing you want is to build something nobody uses, so invest extra time after the project is done to sit with your stakeholders and train them on how to use what you've built for them.

- Interpretability is more important than accuracy. It's tempting to over-optimize for model accuracy, but ultimately your business needs an interpretable model it can easily act on. If you can say conversion is expected to go down by 10% with 90% confidence but you don't know why, that doesn't really help. But if you can say conversion is expected to go down by 10% with 80% confidence and the change is likely due to changing market rates, that's much more impactful.

- Causal inference is hard. This project presents a fairly crude way of determining causality. There's a lot of interesting work happening in causal methods that we may draw from for next steps, notably Judea Pearl's new book The Book of Why.

As this project demonstrates, the Better data team practices "full-stack data science", where one person owns the design and implementation of a data science project from beginning to end. If this kind of work excites you, we're hiring!

- data

- python
