Upserts in Redshift

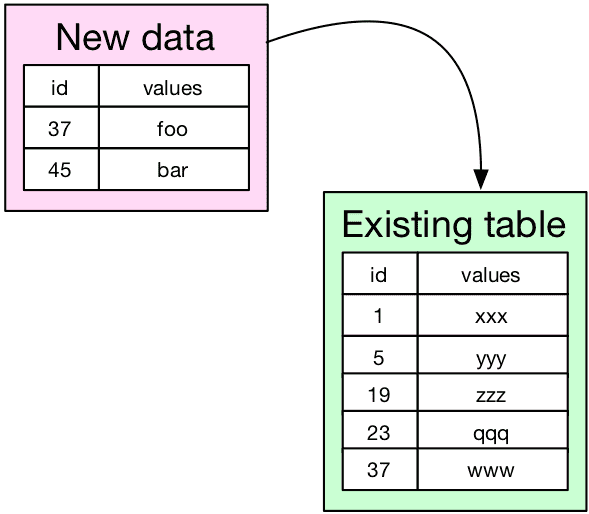

Redshift doesn't support upserts (updates + inserts) but using a few tricks we can implement it anyway.It's pretty common that you want to insert/update data into a new table, but insert or update depending on whether the data already exists. Schematically, we want to do something like this:

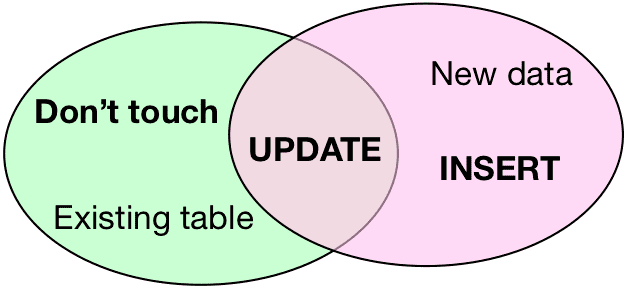

In the case above, we want row 37 to be updated, and row 45 to be added. Just to complement that with a Venn diagram, here's the situation:

Upserts in Redshift

Redshift doesn't support upserts (updates + inserts at the same time) but it can be done using a few tricks:

- Create a temporary staging table and delete entries in the destination table from it

- Removing duplicates in the staging table

The temporary staging table strategy is described pretty well in this blog post. The idea is to insert into a separate table, then delete any overlapping rows (the intersection in the Venn diagram). We can do this using a series of SQL commands:

delete from destination_table using staging_table where staging_table.id = destination_table.id; insert into destination_table (select * from staging_table); drop table staging_table;

Not quite done yet! There's a slightly annoying edge case where we have duplicates in the source table. We can delete those using the following SQL statement:

delete from staging_table using ( select *, row_number() over (partition by id) as row from staging_table ) duplicates where staging.id = duplicates.id and row > 1;

Compound keys

The solution extends pretty easily to compound keys, meaning when you want to use a combination of columns as the key to delete on. Let's say your compound key consists of (id_1, id_2):

delete from staging_table using ( select *, row_number() over (partition by id_1, id_2) as row from staging_table ) duplicates where staging_table.id_1 = duplicates.id_1 and staging_table.id_2 = duplicates.id_2 and row > 1; delete from destination_table using staging_table where staging_table.id_1 = destination_table.id_1 and staging_table.id_2 = destination_table.id_2; insert into destination_table (select * from staging_table); drop table staging_table;

Python code

In case it's useful, here's some Python code that creates and executes the statements for you:

# Note that the child table might have duplicates, so we need to purge those first keys = ', '.join(key_fields) and_clause = ' and '.join(f'{tmp_table}.{field} = duplicates.{field}' for field in key_fields) cursor.execute(f'delete from {tmp_table} using (' f'select *, row_number() over (partition by {keys}) as row from {tmp_table}' f') duplicates where {and_clause} and row > 1') # Now, let's delete the overlapping values in the destination table and_clause = ' and '.join(f'{table}.{field} = {tmp_table}.{field}' for field in key_fields) cursor.execute(f'delete from {table} using {tmp_table} where {and_clause}') # And finally, insert into the destination table cursor.execute(f'insert into {table} (select * from {tmp_table})') cursor.execute(f'drop table {tmp_table}')

- sql

- python

Our thinking

Wizard — our ML tool for interpretable, causal conversion predictions

Building an end-to-end ML system to automatically flag drivers of conversionFri Dec 27 2019—by Kenny Ning6 min read- data

- python

Engineering a Diverse Team: Taffy Chen and Jimmy Farillo

Software engineers Taffy Chen and Jimmy Farillo launch a new blog series to showcase different perspectives on the Better engineering team, and the ways they’re working to make it even more diverse and inclusive.Fri May 14 2021—by Taffy Chen and Jimmy Farillo2 min read- diversity

- inclusion

- perspectives

Modeling conversion rates and saving millions of dollars using Kaplan-Meier and gamma distributions

At Better, we have spent a lot of effort modeling conversion rates using Kaplan-Meier and gamma distributions. We recently released convoys, a Python package to fit these models.Mon Jul 29 2019—by Erik Bernhardsson8 min read